Short answer: “It depends, but usually yes. And you probably should.” The long answer follows.

Laser Power

The length of a fibre optic cable run is primarily determined by whether a strong enough laser signal reaches the receiver in the Gigabit transceiver, commonly known as an SFP. There are other considerations for signal propagation, such as modal dispersion and signal integrity, but let’s keep this simple for now.

When the IEEE creates the standards, part of the technical consideration is the strength of the “laser launch” (the transmitter) and the sensitivity of the “laser receiver”. Both are measured in dBm. For 1000BaseLX/LH using a single mode laser:

[Table: 1000Base LX/LH Gigabit Ethernet]

Note that for 1000BaseSX the figures are significantly different. This is why the shorter cable length comes into play: there is less power budget to work with. But in a given situation we may well be able to exceed the standard and get away with it.

[Table: 1000BaseSX Gigabit Ethernet]

Note that different vendors present their min/max figures differently. For example, Cisco documents the max/min values like this for 1000BASE-SX:

Transmit Power Range -3 to -9.5 dBm

Receive Power Range 0 to -17 dBm

The variation could be due to many factors, such as the manufacturing process, quality control, quality of materials and so on. Manufacturers usually contribute to the standards so that they can be sure they are able to make products that meet them.
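Because one vendor may list a range high-to-low (as in the Cisco figures above) and another low-to-high, it helps to normalise the figures before doing any arithmetic. This is a minimal sketch assuming the range is written as plain text in the form “A to B dBm”; `parse_dbm_range` is a hypothetical helper, not part of any vendor tool.

```python
import re

# Matches text such as "-3 to -9.5 dBm" or "0 to -17 dBm" (assumed format).
_RANGE = re.compile(r"(-?\d+(?:\.\d+)?)\s*to\s*(-?\d+(?:\.\d+)?)\s*dBm", re.IGNORECASE)


def parse_dbm_range(text: str) -> tuple[float, float]:
    """Return (minimum, maximum) in dBm, whichever order the vendor used."""
    match = _RANGE.search(text)
    if match is None:
        raise ValueError(f"no dBm range found in {text!r}")
    a, b = float(match.group(1)), float(match.group(2))
    return min(a, b), max(a, b)


tx_min, tx_max = parse_dbm_range("Transmit Power Range -3 to -9.5 dBm")
# The lower receive figure is the sensitivity; the upper one is the maximum input power.
rx_sensitivity, rx_max = parse_dbm_range("Receive Power Range 0 to -17 dBm")
print(f"Tx {tx_min} to {tx_max} dBm; Rx sensitivity {rx_sensitivity} dBm")
```

With these 1000BASE-SX figures, the weakest transmitter and least sensitive receiver leave -9.5 - (-17) = 7.5 dB to work with.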

Power Sums (with Math)

Now from this we can deduce that the worst-case scenario is when the single mode laser is launching at the lowest possible power of -9.5 dBm and the receiver is at its least sensitive, -20 dBm. That gives a worst case of 10.5 dB of “headroom” for guaranteed function. If the power arriving at the receiver falls below -20 dBm, there is no guarantee that the receiver can decode the serial signal correctly.
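The worst-case arithmetic is simple enough to sketch. Subtracting one absolute power in dBm from another gives a ratio in dB, which is why the headroom is expressed in dB rather than dBm. This sketch uses the -9.5 dBm minimum launch and -20 dBm receiver sensitivity from the text; `power_budget_db` is a hypothetical name.

```python
def power_budget_db(min_launch_dbm: float, rx_sensitivity_dbm: float) -> float:
    """Worst-case power budget in dB: weakest launch minus least sensitive receiver.

    Both inputs are absolute powers in dBm; their difference is a ratio in dB.
    """
    return min_launch_dbm - rx_sensitivity_dbm


budget = power_budget_db(-9.5, -20.0)
print(f"Worst-case budget: {budget:.1f} dB")  # Worst-case budget: 10.5 dB
```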

That is, once the received signal drops below that power level, you might be screwed.

In any given circumstance, launch power is determined by the quality of the fibre connector and the laser installed, and there is not much we can do about that. The power that reaches the receiver is whatever is left after the losses along the way: patch leads, fibre length, fusion or mechanical splices, types of connectors and so on.

Loss on Fibre Optic Path

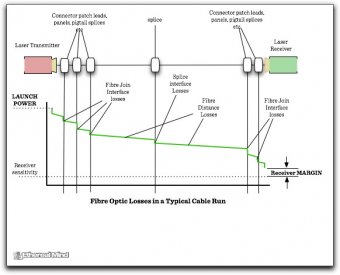

In a perfect case, the laser output of the SFP would be connected directly to the receiver. Using a fibre optic cable introduces loss: the laser signal is attenuated by the cable itself and by each connection along the way, from the SFP to the patch lead and from the patch lead to the fibre backbone. The fibre backbone might also contain several splices or joins in the glass core, as shown in the diagram above.

In short, every break in the fibre core causes some type of loss. Losses for fibre are quite small in most circumstances.
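A rough loss estimate simply adds up the contributions along the path. In this sketch the per-kilometre attenuation and the per-connector and per-splice losses are illustrative assumptions, not figures from any standard; substitute the values from your own cable and component datasheets. `FibrePath` is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass
class FibrePath:
    length_km: float
    connectors: int                  # mated pairs, e.g. SFP-to-patch-lead, patch-lead-to-backbone
    splices: int                     # fusion or mechanical joins in the backbone
    fibre_db_per_km: float = 0.4     # assumed single mode attenuation
    connector_db: float = 0.5        # assumed loss per mated connector pair
    splice_db: float = 0.1           # assumed loss per splice

    def total_loss_db(self) -> float:
        """Sum of fibre attenuation, connector losses and splice losses."""
        return (self.length_km * self.fibre_db_per_km
                + self.connectors * self.connector_db
                + self.splices * self.splice_db)


# Four connections: SFP to patch lead and patch lead to backbone, at each end.
path = FibrePath(length_km=2.0, connectors=4, splices=2)
print(f"Estimated loss: {path.total_loss_db():.2f} dB")
```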

Does it work?

The interesting thing is that even on cabling with more than 10.5 dB of loss, your connection may well work, because your transmitter might have good launch characteristics, i.e. up to -3 dBm of launch power, giving up to 6.5 dB of extra headroom. The receiver could also work at an even lower input power than its rated sensitivity. Thus the recommended length of 550m for 1000BaseLX on 62.5um multimode could be longer, depending on how good the end-to-end performance of the cabling system is.
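To see why a link with more than the worst-case loss may still come up, compare the received power at both ends of the launch range. The 12 dB path loss below is a hypothetical figure chosen to exceed the worst-case budget; the -9.5 to -3 dBm launch range and -20 dBm sensitivity are the figures from the text.

```python
def received_power_dbm(launch_dbm: float, path_loss_db: float) -> float:
    """Power arriving at the receiver: launch power minus total path loss."""
    return launch_dbm - path_loss_db


RX_SENSITIVITY_DBM = -20.0        # least sensitive receiver
LAUNCH_RANGE_DBM = (-9.5, -3.0)   # weakest and strongest transmitter
PATH_LOSS_DB = 12.0               # hypothetical loss, more than the worst-case budget

for launch in LAUNCH_RANGE_DBM:
    rx = received_power_dbm(launch, PATH_LOSS_DB)
    status = "OK" if rx >= RX_SENSITIVITY_DBM else "below sensitivity"
    print(f"launch {launch:+.1f} dBm -> received {rx:+.1f} dBm ({status})")
```

The weak transmitter delivers -21.5 dBm and falls below sensitivity, while the strong one delivers -15 dBm with room to spare: the same cable either works or it doesn't, depending on the transceivers at each end.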

However, cable loss can vary over time, fluctuating with temperature and other environmental factors, and this may cause intermittent errors. The gamble with exceeding the standard lengths is that we risk intermittent and indeterminate faults, often with no apparent cause.

So yes, it depends

The best way to run long cables is to test the installed fibre optic cabling and measure the end-to-end loss, including patch leads. You can then adjust your approach to suit your own requirements.
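Once the installed cabling has been tested, the decision comes down to comparing the measured end-to-end loss against the power budget, while keeping some headroom in reserve for the temperature and environmental drift mentioned earlier. The 3 dB reserve and the measured readings here are assumed figures, not recommendations from any standard; `link_margin_db` and `accept_link` are hypothetical names.

```python
# Worst-case budget from the launch and sensitivity figures quoted earlier.
BUDGET_DB = -9.5 - (-20.0)
RESERVE_DB = 3.0  # assumed allowance for temperature and ageing drift


def link_margin_db(budget_db: float, measured_loss_db: float) -> float:
    """Headroom left after the measured end-to-end loss (including patch leads)."""
    return budget_db - measured_loss_db


def accept_link(budget_db: float, measured_loss_db: float,
                reserve_db: float = RESERVE_DB) -> bool:
    """Accept the run only if the remaining headroom covers the reserve."""
    return link_margin_db(budget_db, measured_loss_db) >= reserve_db


for loss in (4.2, 6.8, 9.0):  # hypothetical test-set readings in dB
    verdict = "accept" if accept_link(BUDGET_DB, loss) else "investigate"
    print(f"measured {loss} dB -> margin {link_margin_db(BUDGET_DB, loss):.1f} dB: {verdict}")
```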

In most cases, cabling contractors have oversold the requirements for fibre cabling and forced most customers to use fusion-spliced backbone cabling, which has less than 0.1 dB loss per connection, when mechanical splicing would be cheaper and faster to complete. Even on a big campus with a 2 km run of single mode fibre, it’s unlikely that you would incur enough loss to be a concern (although dispersion might then become a concern, but that’s another story).
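The point about splicing can be checked with the same kind of sum. This sketch compares a 2 km single mode run with a hypothetical ten splices, using the 0.1 dB per fusion splice quoted in the text and an assumed 0.3 dB per mechanical splice; the 0.4 dB/km fibre attenuation is also an assumption.

```python
FIBRE_DB_PER_KM = 0.4   # assumed single mode attenuation
RUN_KM = 2.0            # campus backbone length from the text
SPLICES = 10            # hypothetical number of joins in the run
SPLICE_LOSS_DB = {
    "fusion": 0.1,      # figure quoted in the text
    "mechanical": 0.3,  # assumed typical mechanical splice loss
}


def run_loss_db(splice_type: str) -> float:
    """Fibre attenuation plus the loss of every splice of the given type."""
    return RUN_KM * FIBRE_DB_PER_KM + SPLICES * SPLICE_LOSS_DB[splice_type]


for kind in SPLICE_LOSS_DB:
    print(f"{kind:>10}: {run_loss_db(kind):.1f} dB")
```

Under these assumptions, mechanical splicing adds about 2 dB over the whole run, and even the mechanical total stays well inside the worst-case headroom discussed earlier.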

But it’s not guaranteed, so you need to consider whether even this very low level of risk is acceptable. In my view, you can easily double the length of fibre cabling runs with good quality transceivers, provided that you test the installed cabling.
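To put a number on how far a single mode run could stretch on power alone, this sketch estimates how much fibre the worst-case budget can pay for once fixed losses and a reserve are deducted. The 2.5 dB of fixed losses (connectors, patch leads, splices), the 3 dB reserve and the 0.4 dB/km attenuation are all assumptions for illustration; `max_length_km` is a hypothetical helper.

```python
def max_length_km(budget_db: float, fixed_losses_db: float,
                  reserve_db: float, fibre_db_per_km: float) -> float:
    """Longest run whose fibre attenuation fits in the budget left over.

    fixed_losses_db covers connectors, patch leads and splices; reserve_db is
    headroom kept back for drift. Returns 0.0 if nothing is left.
    """
    remaining = budget_db - fixed_losses_db - reserve_db
    return max(remaining, 0.0) / fibre_db_per_km


BUDGET_DB = -9.5 - (-20.0)  # worst-case budget from the figures in the text
reach = max_length_km(BUDGET_DB, fixed_losses_db=2.5, reserve_db=3.0, fibre_db_per_km=0.4)
print(f"Power-limited reach: {reach:.1f} km")
```

This counts attenuation only. Dispersion and other signal-integrity effects can limit reach before power does, so treat the result as an upper bound to confirm by testing the installed cabling.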

For an educational campus, I would certainly recommend this practice, since it would save huge amounts of money on otherwise useless switches bought to act as signal regenerators.

Remember, the IEEE is a zero-risk, backward-looking, nothing-can-change-too-much, don’t-rock-the-boat organisation. Conservative doesn’t even begin to describe their approach to physical transmission performance.
